A year after Microsoft unveiled its big push into AI, riding the early wave of ChatGPT excitement, we still haven’t come to terms with how AI fits into our world. Sorting it out will take time, but we need to start with the assumption that AI is here to stay.

Ever since generative AI tools began entering the mainstream a couple of years back, many have opposed AI outright for a variety of reasons. That opposition intensified with ChatGPT’s arrival.

We’re quickly seeing AI used to spam us in new ways, generating more annoying junk phone calls and filling the web with useless content. Some artists and other creatives see it as a significant threat, and understandably so. Others rightly wonder whether the inevitably malevolent AI of sci-fi might soon be the AI of sci-drop-the-fi everyday reality.

These problems are real, but solving them requires grounding ourselves in another “real” point: AI is not going away. We need to figure out how to live with it and use it for good, much as previous generations had to figure out how to live with the Industrial Revolution, the computer revolution and any number of other technological innovations throughout the ages.

AI is already ubiquitous. Try going just one day without being affected by machine learning technology. Use a credit or debit card? Card issuers apply that tech to analyze whether the charge is legitimate. Go on social media? AI plays a role in what you see. Type a message on a phone? Autocorrect might make us doubt the “intelligence” in “artificial intelligence,” but whether it works well is a separate question from whether it is there.

Will AI generate nuisances we haven’t even dreamt of? Yes. As I pointed out in my Monday night sermon this week, the history of technological misuse is as old as human innovation. The philosophers’ maxim is apropos: abusus non tollit usum (“abuse does not negate use”).

The solution to abuse of technology is to figure out how to harness it against those misuses. Regulating the problem out of existence may sound easier, but spammers and scammers (and worse) will not worry about regulations any more than a criminal frets over using an illegal weapon.

Handicapping law-abiding use of machine learning models only gives malevolent use a greater advantage. We are witnessing an arms race. If we fear AI, give the “good guys” the upper hand; don’t tie them up for easier punching by the bad guys.

All the more so for those who fear Terminator-style AI. As I’ve written before, the nations of the world will race to adopt AI technology. China, Iran, Russia and their friends will explore AI. The point about not hamstringing the “good guys” is even more crucial when it comes to those who wish to kill us rather than merely steal our wallets.

Freedom-loving countries and individuals need to embrace AI. We want so many different AI systems, decentralized and with no one holding the upper hand, that destructive uses are curtailed.

(Discerning the “good guys” versus the “bad guys” is not always easy, but if technology is broadly available, open source whenever possible and legally unhindered, picking who should have the upper hand isn’t even required.)

There are signs this is already happening in our world. Take the aforementioned premiere of Microsoft’s AI technology. While Microsoft is hardly a traditional underdog, in search its decent Bing search engine has been the unmistakable David to Google’s Goliath.

In traditional search engine development, catching up has become quixotic. Who can out-index Google, particularly when many sites refuse to talk to unknown bots that might represent a challenger-to-be? Changing the rules of the search game is the only way to dethrone King Google, and that’s what Microsoft did. The company’s search-informed, ChatGPT-related chatbot, now dubbed “Copilot,” created the first genuine competitive threat to Google in well over a decade.

I remember that in the early 2000s, whenever I searched on Google, the first few results were always really useful. Recent years have been far less kind to search results, thanks to both intentional changes from Google (too many ads, like its long-vanquished competitors) and unintentional ones (“SEO” people moving faster to promote their junk than Google has moved to filter it).

The AI-ization of search that Microsoft started last year marks the first time in years that search results haven’t gotten worse than the year before. While many websites may give Google preferential treatment, Microsoft is leveraging its access to the best AI models to at least make the data it does have as useful as possible.

And it isn’t just Microsoft. Quora’s Poe provides a fantastic interface to a whole host of AI tools useful for research. After I tooted about AI on Mastodon a few weeks ago, a reader pointed me to Perplexity, which is impressive in how it approaches the task we would traditionally think of as search.

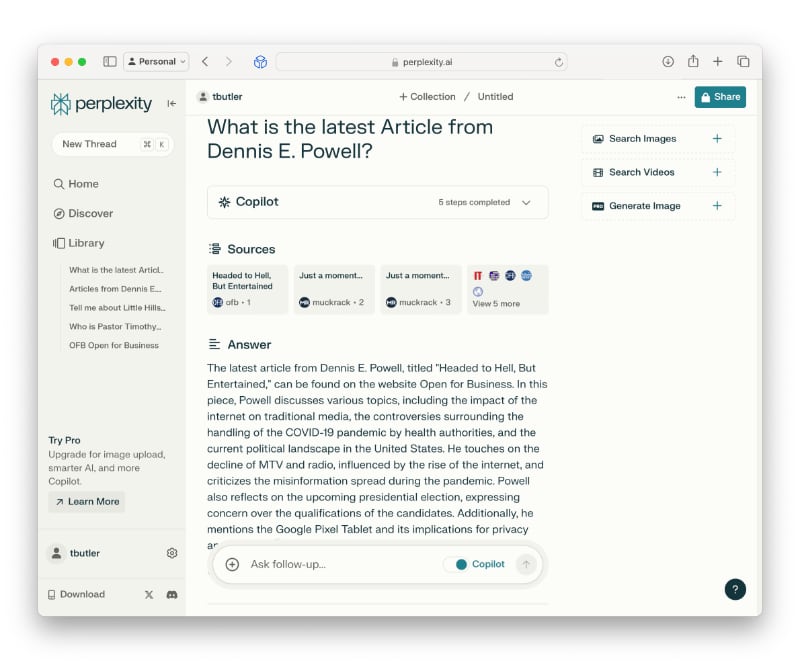

Consider: I typed “What is the latest article from Dennis E. Powell?” into Google, Bing and Perplexity. Google gave a link to Dennis’s author page here at OFB. Bing suggested articles about Fed Chair Jerome Powell. Perplexity cited the title of Dennis’s article from last week (his most recent at the time) and summarized it before linking to OFB.

(Bing’s Copilot mode did try to do something similar, but picked an older article.)

True, a better search engine isn’t a defense against real-life cyborg assassins. Yet it gives us a taste of what AI-for-good can do. The democratization of technological advancement lets an upstart like Perplexity best the behemoths. And the smaller behemoth, Bing, can at least occasionally edge out Google.

That same sort of process can apply to everything from helping individual creators stand up to Big Media all the way to warding off future Skynet killers. If freedom-loving countries are at the forefront of AI, they can answer when authoritarian AI comes calling. As I’ve argued before, if average Joes like me have access to AI, the citizenry can also help keep those freedom-loving countries from becoming more authoritarian.

AI carries risks. We should use caution. Rejecting or over-regulating it, though, is not tenable. Ask those who rejected the Industrial Revolution.

Full Disclosure: Tim owns a small amount of Microsoft (MSFT) stock.

Timothy R. Butler is Editor-in-Chief of Open for Business. He also serves as a pastor at Little Hills Church and FaithTree Christian Fellowship.
